Luca Schmidtke1, Athanasios Vlontzos1, Simon Ellershaw1, Anna Lukens3, Tomoki Arichi2 and Bernhard Kainz1

1 Imperial College London, 2 King’s College London, 3 Evelina Children’s Hospital

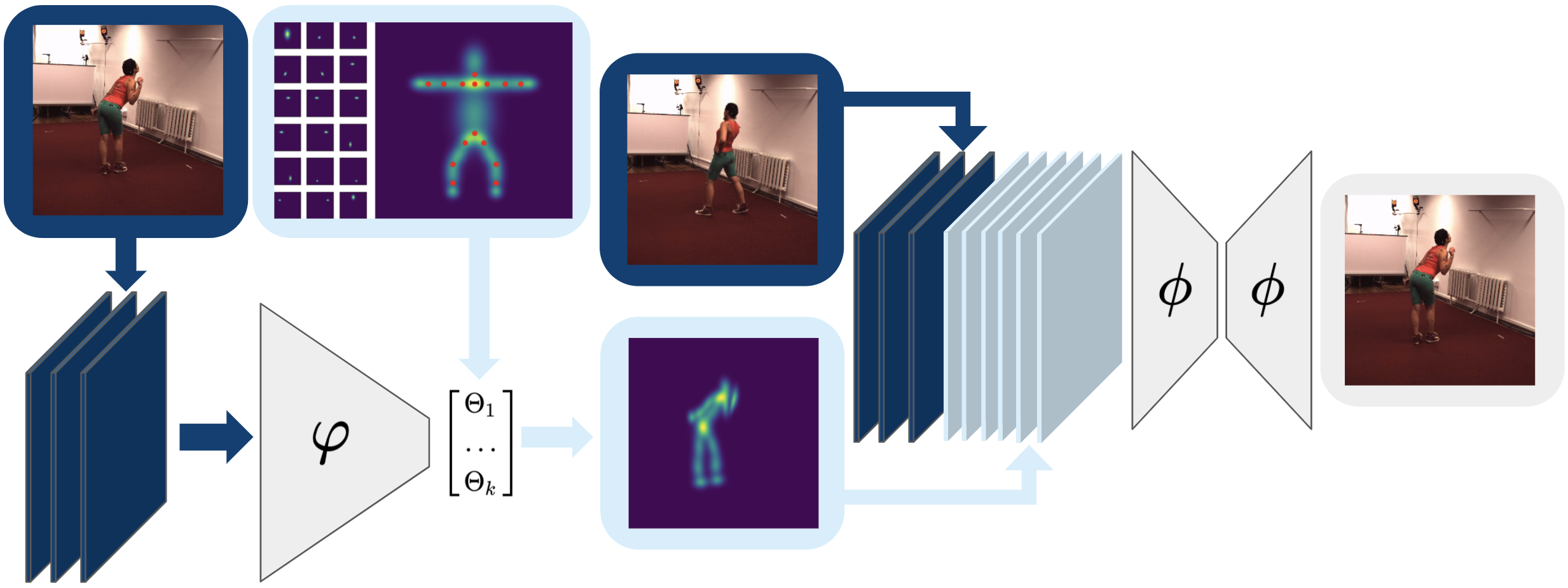

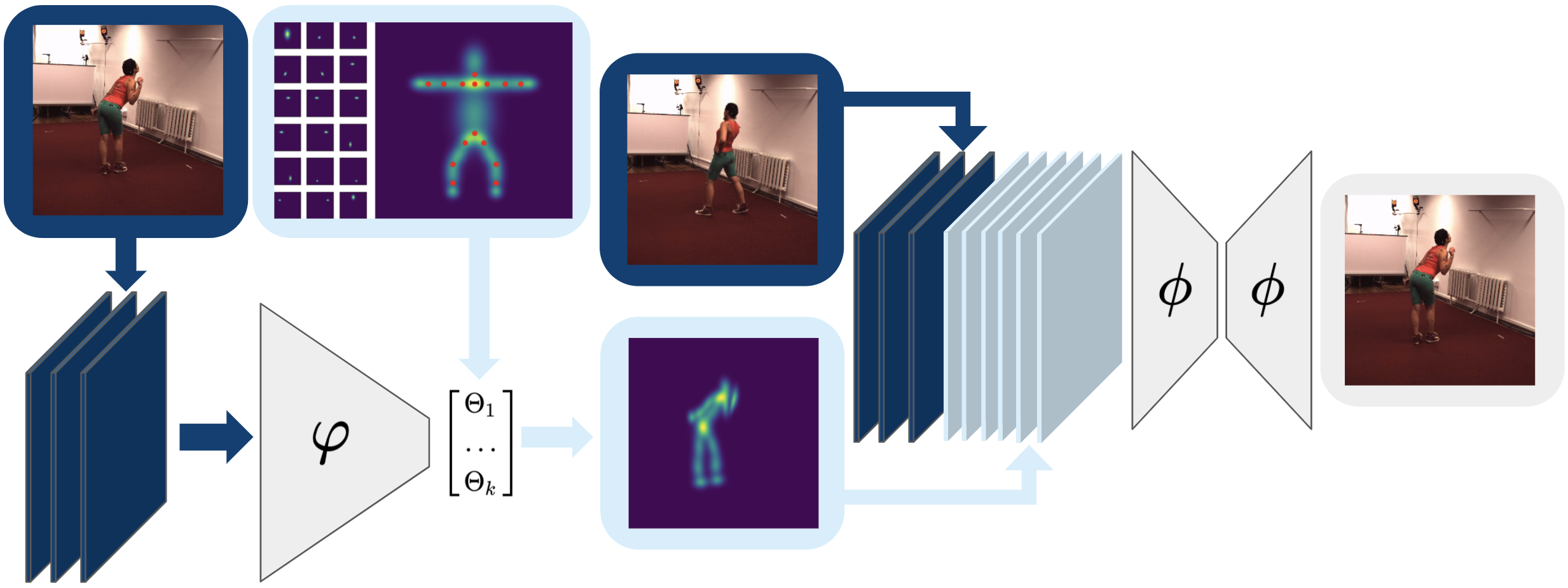

Human pose estimation is a major computer vision problem with applications ranging from augmented reality and video capture to surveillance and movement tracking. In the medical context, movement tracking may provide an important biomarker for neurological impairments in infants. Whilst many methods exist, their application has been limited by the need for large, well-annotated datasets and by their inability to generalize to humans of different shapes and body compositions, e.g. children and infants. In this paper, we present a novel method for learning pose estimators for human adults and infants in an unsupervised fashion. We approach this as a learnable template matching problem facilitated by deep feature extractors. Human-interpretable landmarks are estimated by transforming a template consisting of predefined body parts that are characterized by 2D Gaussian distributions. Enforcing a connectivity prior guides our model to meaningful human shape representations. We demonstrate the effectiveness of our approach on two different datasets including adults and infants.
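To make the idea of a body part "characterized by a 2D Gaussian" and then transformed more concrete, here is a minimal sketch. It is not the authors' implementation: the function names, the example part, and the hand-picked rotation and translation are all hypothetical, and the affine parameters are fixed by hand here rather than learned. The sketch relies only on the standard fact that an affine map x -> Ax + t sends a Gaussian with mean mu and covariance Sigma to one with mean A mu + t and covariance A Sigma A^T.

```python
import math

def transform_gaussian(mean, cov, A, t):
    """Push a 2D Gaussian (mean, cov) through the affine map x -> A x + t."""
    mx, my = mean
    new_mean = (A[0][0] * mx + A[0][1] * my + t[0],
                A[1][0] * mx + A[1][1] * my + t[1])
    # Sigma' = A Sigma A^T, computed as (A Sigma) A^T
    a_sigma = [[sum(A[i][k] * cov[k][j] for k in range(2)) for j in range(2)]
               for i in range(2)]
    new_cov = [[sum(a_sigma[i][k] * A[j][k] for k in range(2)) for j in range(2)]
               for i in range(2)]
    return new_mean, new_cov

def gaussian_density(point, mean, cov):
    """Evaluate the 2D Gaussian density at a point (cov must be invertible)."""
    dx, dy = point[0] - mean[0], point[1] - mean[1]
    det = cov[0][0] * cov[1][1] - cov[0][1] * cov[1][0]
    inv = [[cov[1][1] / det, -cov[0][1] / det],
           [-cov[1][0] / det, cov[0][0] / det]]
    q = dx * (inv[0][0] * dx + inv[0][1] * dy) + dy * (inv[1][0] * dx + inv[1][1] * dy)
    return math.exp(-0.5 * q) / (2.0 * math.pi * math.sqrt(det))

def rotation(theta):
    """2x2 rotation matrix for an angle in radians."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s], [s, c]]

# Hypothetical template part: an elongated, vertically oriented "limb".
part_mean = (0.0, 0.0)
part_cov = [[1.0, 0.0], [0.0, 9.0]]

# Hand-picked transform for illustration: rotate by 90 degrees, then translate.
A = rotation(math.pi / 2)
t = (5.0, 2.0)
new_mean, new_cov = transform_gaussian(part_mean, part_cov, A, t)
```

In this toy setting, the transformed mean can be read as a landmark location for the part, and the transformed covariance shows the limb's long axis turning from vertical to horizontal.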

@inproceedings{LSchmidtke2021,

author = {Luca Schmidtke and Athanasios Vlontzos and Simon Ellershaw and Anna Lukens and Tomoki Arichi and Bernhard Kainz},

title = {Unsupervised Human Pose Estimation through Transforming Shape Templates},

booktitle = {Proc. IEEE Conf. on Computer Vision and Pattern Recognition (CVPR)},

year = {2021},

}